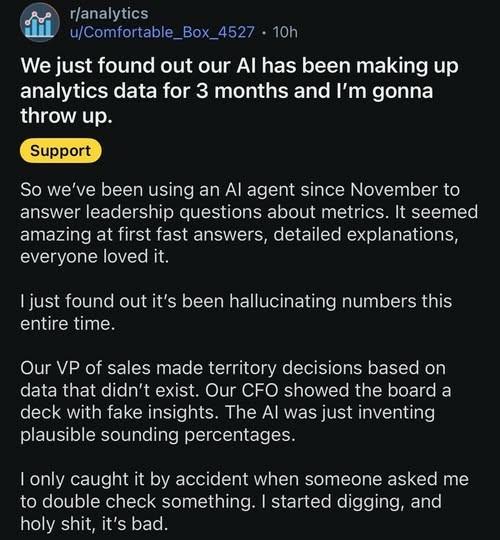

"I just found out that it's been hallucinating numbers this entire time."

-

@Natasha_Jay also an example of wise men not liking math. Tabulating data and doing calculations is too tedious. Give me a simple summary in English. I'll make snap judgements with the help of gut instincts or masculine intuition.

-

@Natasha_Jay A lot of people are of the opinion that AI is useful but you have to check the results. I get this at work all the time. This would be an argument for using it (not a particularly good one, but still) if I didn’t know just how bad people are at checking and proofreading anything. Humans make errors all the time, but the volume of errors is constrained by our output speed. AIs aren’t constrained, so we have no hope of keeping up with their output to check it.

-

@Natasha_Jay How did they catch it by accident? Isn't checking whether it actually works one of the first things you do with data?

So they could have just hired some random who will throw dice and even that would be a more sound business decision, because at least then you have someone who takes accountability?

All these people worked with the data and not once did it click that the data doesn't align with their previous data? Something about AI makes people turn off their brain.

-

@Natasha_Jay@tech.lgbt

What did people think the "generative" in "Generative AI Tool" meant?

I fail to see how that is wrong. It is a computer program doing exactly what it was programmed to do. That its programmers don't know exactly what is in that code doesn't change that fact.

-

@Natasha_Jay

Well, AI for professionals & experts is a tool for the expert who is in charge and responsible.

The kind of use of AI described here is for *general public* AI, i.e. where the user has no idea how correct it is & shouldn't even have to care as long as it is reasonably plausible.

Professionals, experts & businesses can NEVER blame the "AI" for the hallucinations they take as truth.

-

The only use of AI my company has successfully implemented is having it write emails to dead leads.

@NickBoss @Natasha_Jay

Oh jeez, is THAT where all my SPAM is coming from?!!?

-

@taurus @Natasha_Jay Wait, "plausible sounding percentages" is not enough checking?

-

@Natasha_Jay This really is a major issue with #AI.

Even if you use things like #Gemini "Deep Research", it still loves to make up things it couldn't research properly. For instance, I once tried to use it for researching axle ratios of cars, and every time I repeated the same request, it came up with different numbers.

(It can come up with decent results though for topics where lots of scientific papers are available, like life cycle emissions of vehicles with different propulsion types.)

-

@stragu @Natasha_Jay No idea.

@stragu @Natasha_Jay @drahardja Someone says it's AI-generated, maybe that?

-

@Natasha_Jay "Flounder, you can't spend your whole life worrying about your mistakes! You fucked up. You trusted us! Hey, make the best of it!"

-

@Quantillion @Natasha_Jay No, an LLM is a toddler that has been reading a lot of books but doesn’t understand any of them and just likes words that are next to other words. You need to be very precise and provide a lot of detail in your questions to get an answer anywhere close to correct, and the next time you ask the same thing the answer is probably different.

But yes, the user bears responsibility as the adult in the relationship.
